Distinguishing real from invented problems with the NIH

How does the NIH work and where does it work well?

Full disclosure: I am an NIH funded investigator.

The incoming administration has pledged, for a second time, to take a sledgehammer to the institutions of the federal government and beyond. This includes the National Institutes of Health, an agency that has funded some of the most important clinical research and technology development of the past century. I personally think the most likely outcome of the next four years is a tax cut for wealthy elites like myself, a carve-out for our Teslas, and some directionless chaos. But there is a chance the chaos will end up directed at the institution I see as one of America’s crown jewels, so it is important to be clear-eyed about what the NIH actually does and where there are genuine opportunities for reform.

What does the NIH actually do?

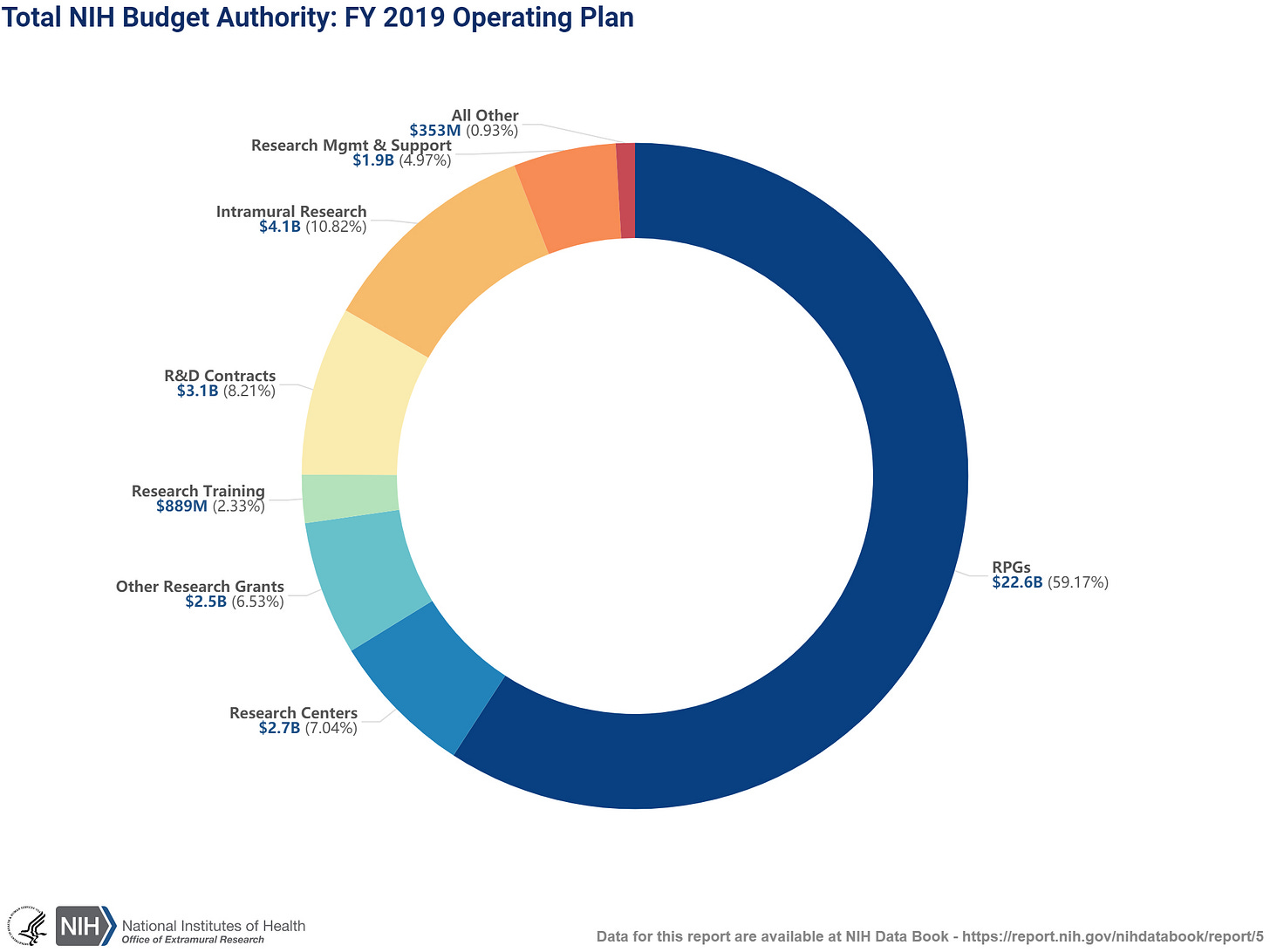

In a nutshell, the NIH funds research on health and disease. In 2019, 60% of the NIH budget went to research project grants (abbreviated, somewhat aggressively, as “RPGs” in the figure below) and another 35% went to research that was either happening at the NIH itself (“intramural”), through R&D contracts, in specialized research centers, or as training. What’s left? 6% for management, support, and other expenses. Research is far and away the largest component.

To understand how this money gets distributed, let’s focus on the RPGs, which form the single biggest chunk of research funding. The NIH review process itself is interesting and mostly unknown to the public. Each year, the NIH issues multiple calls or requests for RPGs with essentially no prerequisite other than “fall[ing] within the mission of the participating institutes” and coming from an eligible investigator and institution. Investigators submit their proposals, which are then directed to a “study section”, a topic-specific group of other researchers that meets several times a year to review and score the submitted proposals. In general, study section members themselves previously received a grant through the corresponding study section, and volunteer either for a fixed term or on an ad hoc basis (serving on a study section is seen as prestigious and comes with some small administrative perks, but is otherwise not mandatory or compensated). Each study section consists of several dozen members and the roster is made publicly available, making the review process quasi-anonymous: investigators are aware of who was in the discussion but not which specific members reviewed their proposal.

In advance of the study section meeting, three members are assigned to read and score each submitted proposal based on (as of 2025) the importance and rigor/feasibility of the work. In this way, every proposal has to advocate that it is scientifically significant, innovative, and that the approach is sound. Finally, the study section meets to discuss the proposals, typically in a hotel/office in Bethesda over the course of several grueling days. For each proposal, the assigned reviewers present/summarize the material and explain their scores, which are then used to set the scoring “range”. The other section members can ask questions or raise concerns, a discussion takes place, and then every section member enters their score. This final scoring process is again anonymous, unless section members want to vote “out of range”, in which case they have to declare their intention to the study section. This little ritual adds another layer of quasi-anonymous accountability, so that someone who is regularly deviating from the range set by the appointed reviewers is making themselves known to the study section. Finally, the scores for each proposal are averaged across the entire section to get the final score and ranking. Thus, the scientific merit of proposals is evaluated by a panel of other scientists with subject-matter expertise.

What happens between scoring and funding is a bit more opaque. Generally speaking, each proposal is assigned to one of the NIH institutes when it is submitted, and these institutes actually make the funding decisions. The institutes and the study sections are independent but correlated: for example, the Cancer Genetics study section will likely be reviewing proposals that end up being funded by the National Cancer Institute, whereas the Genetics of Health and Disease study section could review proposals that are funded by a variety of institutes depending on their focus. Typically, any proposals scoring better than an institute-specific “pay line” are automatically recommended for funding, with the remaining top scoring grants left to the discretion of the NIH officers and their budget. This creates a boundary between the study section, where the science is evaluated for merit, and the funding body, where the scored proposal is considered for funding based on that evaluation. It also means that some proposals in a given study section may not be funded even though they scored better than proposals that were funded, because they were submitted to an institute with a lower (stricter) pay line. The current pay lines are in the ~10% range, meaning that 90% of proposals do not get funded on the first round of review; overall success rates are in the ~20% range, meaning that 80% of proposals do not get funded at all.
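To make the pay line mechanics concrete, here is a minimal sketch of how two proposals from the same study section can end up with different outcomes. Everything in it is an assumption for illustration: the institute names, the pay line values, the proposal scores, and the convention that scores are percentiles where lower is better and a score exactly at the pay line counts as clearing it. It is not a description of how any institute actually implements its decisions.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    name: str
    institute: str
    percentile: float  # assumed convention: lower percentile = better score


# Hypothetical pay lines (percentile cutoffs), chosen only for illustration.
PAY_LINES = {"Institute A": 12.0, "Institute B": 8.0}


def clears_pay_line(proposal: Proposal, pay_lines: dict) -> bool:
    """True if the proposal scores at or better than its institute's pay line."""
    return proposal.percentile <= pay_lines[proposal.institute]


# Two made-up proposals reviewed in the same study section.
proposals = [
    Proposal("Proposal X", "Institute A", 11.0),
    Proposal("Proposal Y", "Institute B", 9.0),
]

# List proposals from best to worst score and report each outcome.
for p in sorted(proposals, key=lambda p: p.percentile):
    outcome = "recommended" if clears_pay_line(p, PAY_LINES) else "left to discretion"
    print(f"{p.name}: percentile {p.percentile}, {p.institute} -> {outcome}")
```

In this toy setup Proposal Y has the better score but misses its institute's stricter pay line, while Proposal X clears a more generous one.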

There are a few places where the NIH can nudge the process in a desired direction. First, in an effort to fund younger and more junior researchers, the NIH provides an Early Stage Investigator (ESI) “bonus” to young, first-time grantees, which increases their pay line. All the newbie proposals also get discussed together, in an attempt to manage appropriate expectations before going to the submissions from tenured dinosaurs. Second, a fraction of proposals that score in a gray area (high scoring but not crossing the pay line) can be recommended for funding by NIH staff based on some (largely mysterious) internal criteria of merit. Third, the NIH can officially set aside some funding for specific research topics that are of high priority to a given institute or for specific types of investigators (physicians transitioning into computational research or vice versa, new postdoctoral fellows, small “high risk” research, etc). Anecdotally, these set-asides seem to play a small role in the budget. Fourth, and perhaps most under-appreciated, the NIH can tell reviewers how to score the proposals (i.e. drive the reviewer culture) by defining the scoring criteria and reminding reviewers of what they should or should not consider important. Reviewers are, ultimately, free to do what they please, but humans tend to be obedient creatures and such nudges can add up to meaningfully shape the way science is evaluated over the long term.

In short:

The vast majority of the NIH budget goes to funding scientific proposals.

All proposals have to justify in detail why they are scientifically significant, in addition to being feasible.

Proposals are reviewed and scored by other scientists in a competitive, quasi-anonymous discussion.

80% of proposals never get funded, and this number has steadily grown over time.

The NIH exerts influence by setting the funding priorities and can apply nudges to proposals that get a borderline score or by driving the culture of the review process itself.

What are some real problems?

The NIH is currently very good at funding research that has led to high impact papers. I say “has led” because it is not always clear whether the funding supports the work or the work was retroactively ascribed to the funding. But grab an important recent paper and you can pretty much bet there will be an NIH grant in the acknowledgements. And if you talk to individual researchers, you will hardly ever hear someone complain that it is too easy to get their work funded. So what are the problems?

Reviewer scores often do not predict success

A fundamental issue in the process is that reviewer scores do not seem to be good predictors of the eventual impact of a funded project beyond a basic level of feasibility. Several studies have now looked at this. For example, Fang et al. (2016) re-evaluated >100,000 funded grants and found essentially no correlation (r² = 0.0078) between the grant score and the number of publications or citations resulting from the award, concluding that:

despite the overall ability of reviewers to discriminate between extremely strong grant applications and the remainder, they have limited ability to accurately predict future productivity of meritorious applications in the range relevant to current paylines … The data also suggest that current paylines are inadequate to fund the most productive applications and that considerable potential productivity is being left on the table at current funding levels

This is not a perfect analysis because all of the grants did get funding, which means they passed someone’s criteria for merit and are range restricted. But it does suggest that the difference between “fundable and good” and “fundable and excellent” is mostly meaningless, even though this is exactly where much of the study section discussion takes place. Beyond wasted reviewer effort, this also has some subtle consequences for investigators. Proposals that land in a fundable range can be revised and resubmitted for a second evaluation, and investigators typically sweat every detail of these revisions in the hopes of dragging the proposal over the finish line, even though they are responding to feedback that is largely arbitrary. This is a waste of substantial researcher effort and may even lower the quality of the resulting science. Over the course of many submissions, this pointless back-and-forth likely has a gradually corrosive effect on the culture around funding. Investigators get disillusioned and burnt out or become cynical; either way, they come to see their relationship with the NIH as dysfunctional and capricious.
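The range restriction caveat is easy to see in a toy simulation. The sketch below is not a reanalysis of Fang et al. and uses no real grant data: it assumes a made-up model in which both the review score and the eventual impact are noisy readings of the same underlying “quality”, and that only the top 20% of scores get funded. All names, noise levels, and the funding fraction are illustrative assumptions.

```python
import random


def r_squared(xs, ys):
    """Squared Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)


rng = random.Random(0)  # fixed seed so the run is repeatable
N = 20_000              # number of simulated proposals (arbitrary)

# Assumed toy model: score and impact are both "quality plus independent noise".
quality = [rng.gauss(0, 1) for _ in range(N)]
scores = [q + rng.gauss(0, 1) for q in quality]
impact = [q + rng.gauss(0, 1) for q in quality]

# Keep only the top 20% of scores (here, higher simulated score = better).
cutoff = sorted(scores, reverse=True)[N // 5 - 1]
funded = [(s, i) for s, i in zip(scores, impact) if s >= cutoff]

r2_all = r_squared(scores, impact)
r2_funded = r_squared([s for s, _ in funded], [i for _, i in funded])

print(f"r^2 across all proposals:   {r2_all:.3f}")
print(f"r^2 among funded proposals: {r2_funded:.3f}")
```

Under these assumptions the correlation among funded proposals comes out noticeably weaker than across the full pool, even though scores are informative by construction. That is the sense in which an analysis of funded grants alone can understate how well scores track outcomes overall, while still telling us that fine distinctions within the fundable range carry little signal.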

Various creative approaches have been proposed to deal with the poor predictability of grant scores. Most notable is the use of lotteries, whereby grants are initially evaluated for qualitative merit, and then a subset is selected for funding randomly. Such a random allocation scheme has been in place in New Zealand since 2013 and has not, it seems, generated substantial backlash.

Few mechanisms for genuinely high risk projects

In principle the NIH has several mechanisms that are intended for risky proposals (such as the “R21 Exploratory/Developmental Research Grant Program” intended for small “exploratory” proposals) or risky investigators (such as the “Katz Early Stage Investigator Research Project Grant”, which does not allow any preliminary work to be submitted). The problem is that reviewers have developed certain expectations about the review process, in particular that proposals should contain a sizable amount of “preliminary work” demonstrating feasibility. Slapping the words “Exploratory” or “Innovative” on the title of the grant does not change reviewer habits. As a result, R21s have incredibly low success rates and often seem to require even more preliminary work than a regular proposal. The Katz is fairly new, but also appears to be converging on a reviewer expectation of (implicit) preliminary work even though this is explicitly against the terms of the proposal. These reviewer expectations incentivize proposals that are incremental or that “propose” a project that is already nearly complete in the hopes of using the funding on research the investigator is actually interested in, effectively reversing the funding paradigm.

… and few mechanisms for important low risk projects

And yet, the NIH often underfunds important low risk projects. A fundamental need in many fields is the generation of large scale data and systematic benchmarking that would be outside the capacity or interest of a single lab. One example is thoroughly evaluating the same cell line with a dozen different technologies to establish their efficiency and reproducibility. This type of work is critical for the community but does not itself lead to novel discoveries and is thus typically frowned upon by reviewers. Even when the NIH issues calls for large-scale or collaborative efforts, they typically still fall into the structure of a standard grant or a cluster of standard grants, with reviewers expecting a slate of novel discoveries. A related issue plagues the funding of software development: reviewers are eager to fund novel algorithms that promise to solve new problems, but are reluctant to provide funding for the maintenance of existing software, even if that software is widely used. It is not uncommon for labs that host web applications with millions of users to resort to begging for donations just to keep the servers up and running.

Both problems — not enough high risk funding and not enough low risk funding — are fundamentally a misalignment between reviewer expectations and what the field actually needs. Providing more funding for these types of projects is an obvious solution, but it is also important for the NIH to use its study section nudges to tell reviewers what deserves to be scored highly so that they do not fall back on their conservative biases.

Persistent gaps

As I mentioned above, the NIH already provides a bonus to investigators who are within 10 years of their terminal degree and have not yet received a large award, so-called Early Stage Investigators (ESI). The 10 year limit (as opposed to a bonus for any first-time proposal) effectively penalizes individuals who, for whatever reason, took time off from research. It is pretty clearly a backdoor bonus for young (wall clock) investigators. And yet, the median age of ESIs receiving a large-scale NIH grant is 39; hardly young. Even with the bonuses, ESIs still receive only a minority of awards, accounting for just 19% (with new awardees of any kind accounting for 33%). While the NIH could certainly increase the ESI bonus even further, this is really a pipeline problem, as ESIs themselves make up only 17% of applicants (39% for new applicants of any kind).

Beyond age, the NIH also faces diversity gaps. African American applicants were 10% less likely to receive NIH funding than white investigators, even after controlling for multiple factors related to educational background: the so-called “Ginther Gap”, named after the lead author of the seminal Ginther et al. (2011) Science analysis. A 10% difference may not seem like much, but an African American investigator with a 15% success rate will need to write 10 proposals to reach an 80% chance of being funded, whereas a white investigator with a 25% success rate (i.e. 10 percentage points higher) needs to write about 5.6, nearly halving the amount of work that is needed to secure independent funding. The source of the gap remains in dispute. Some disparities can be observed earlier: African American PhD students and postdocs publish at roughly the same rate as white students, but their publications tend to net fewer citations, and this citation gap increases substantially when they become investigators. But much of the gap actually appears to be due to funding priorities by the NIH itself, as Donna Ginther summarized in a reflection on her 2011 study:

Subsequent research has found limited evidence of bias in the review process (Forscher, et al., 2019; Erosheva et al., 2020; Nakamura et al., 2021). Hoppe et al. (2019) examined each stage of the NIH review process. They found that African American/Black researchers chose topics that were less likely to receive funding at the stage where proposals are discussed. Wally Schaffer, Laure Haak, and I raised significant concerns about the implications of the Hoppe et al. (2019) study, and we cautioned researchers from changing their research topics. We were not the only ones. On reanalysis, the Lauer et al. (2021) abstract concludes “The lower rate of funding for these topics was primarily due to their assignment to ICs [Institutes or Centers] with lower award rates, not to peer-reviewer preferences.”

This gap could potentially be addressed by prioritizing more funding for NIH institutes that tend to receive applications from minority investigators (though it is worth keeping in mind that investigators follow the money). The number of African American investigators also continues to increase, and finding more avenues for junior faculty to be involved in study sections (including unfunded investigators) could help change the implicit priorities in the review process.
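The proposal counts quoted earlier for the 15% and 25% success rates follow from a simple calculation, sketched here under one simplifying assumption: each submission is an independent attempt with a fixed success probability p, so the chance of at least one award after n submissions is 1 - (1 - p)^n. Real submissions are not independent (resubmissions build on earlier reviews), so treat this as a back-of-the-envelope estimate. The function name and the 80% target default are illustrative choices.

```python
import math


def proposals_needed(success_rate: float, target: float = 0.8) -> float:
    """Solve 1 - (1 - p)**n = target for n, assuming independent submissions."""
    return math.log(1 - target) / math.log(1 - success_rate)


n_at_15 = proposals_needed(0.15)
n_at_25 = proposals_needed(0.25)

print(f"15% success rate: about {n_at_15:.1f} proposals")
print(f"25% success rate: about {n_at_25:.1f} proposals")
print(f"ratio: {n_at_25 / n_at_15:.2f}")
```

The lower success rate requires close to ten submissions and the higher one between five and six, so a gap of 10 percentage points translates into nearly twice the writing effort.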

The process is slow

It takes roughly a year from the time the proposal is submitted to the time the funding goes out the door. Given that the review process involves a month of pre-reading and two days of discussion, most of that year is thus spent “in the system”. And since ~90% of proposals are not funded in the first round, the typical timeline is actually two years or more. Then, once the proposal is funded, the work has to start more or less immediately. This creates several logistical challenges. First, two years is an enormous amount of time for science, and much of the proposed work will have either been done or needs to be substantially modified. Second, hiring talented people for large-scale projects is hard, and having to do so quickly in response to a stochastic process is even harder. Labs often hire researchers with the expectation/hope that a certain direction will be funded and use discretionary funding or other sources to float them along. This is needlessly precarious for everyone involved, as trainees join a lab not knowing whether their intended area of study will be supported, and investigators hire trainees not knowing whether they will actually be able to do the thing they are good at. I do not have a solution to propose here because, I’ll be honest, I fundamentally do not understand what goes on behind the scenes during that year in review.

And what are some invented problems?

The post-election honeymoon period has also led to a flurry of unserious reform proposals, often exhibiting very little knowledge about the basic function of the institutions they are intended to reform. I do not want to pick on this stuff too much but I think it is useful to counter some of the misguided ideas that are gaining traction.

Some funded grants have silly names

This comes up every time there is a call to cut federal spending: politicians will go out and find some proposals about bugs having sex or monkeys taking drugs and present them as self-evidently wasteful. Of course, research on model organisms is critical to the scientific process and many breakthroughs have come out of exploiting the raw materials mother nature provides us with. That bug sex study might identify a new hormone that leads to the next miracle obesity treatment. The drug monkey study might discover a new pathway for managing addiction. As I noted above, the typical proposal had to advocate for its scientific importance to a study section of several dozen experts and beat out 80-90% of its competitors. There are always exceptions, but it is extremely unlikely that a flood of silly proposals is being funded. And if it is, the problem then lies with the study section and not the proposals themselves.

The proposals have too many pages

A related trick is to itemize the number of pages in a typical grant proposal and argue that length alone is evidence of waste. The last NIH proposal I submitted was about 150 pages, which might indeed seem daunting. But only ~12 pages of that were dedicated to science and will be the focus of study section reviewers (and I can also assure you that I wish I had more than 12 pages to work with). The remainder was some combination of budgets, resumes for all of the personnel involved, descriptions of the data and resources, and contractual language between the NIH and my institution. Nearly all of it was handled by experienced grants administrators in my department who can put these documents together in a matter of hours. The reason the material has to be there is so that reviewers can access it in the rare instances they need to know about a supporting detail, and so that the project can be awarded immediately without modification. Is there room to make this process more streamlined (as well as retraining everyone on the new process)? Probably. Is this a major institutional failure in dire need of reform? No.

Insufficient funding for “taboo” research

Harvard economist Roland Fryer recently argued for research into the genetic causes of health disparities (“If someone finds that health disparities are driven by genetics rather than social factors—that too should be celebrated. We need something like the MacArthur Fellowship or the X Prize for telling the truth about data.”). Ironically, the NIH provides a substantial amount of funding for exactly this purpose, including a slew of programs on the genetics of health disparities, a multi-million dollar grant opportunity for “research into the biological/genetic causes of cancer health disparities”, a center to reduce cancer health disparities, and so on. And this goes beyond cancer. Earlier this year, Nature Neuroscience published a study analyzing genetic ancestry differences in postmortem brains from African American participants; with extensive support from NIH funding. The underlying data was collected as part of a clinical trial also run by the NIH itself. I suspect that Fryer made the common assumption that research into genetic causes of group differences falls afoul of some liberal/political correctness taboo. In reality, this type of research is heavily supported when it is conducted with rigor.

Just burn it down and start fresh

A surprisingly common take is to list some issues with the NIH and then propose to abolish or defund the agency entirely. Let’s take this proposal seriously for a moment and play the tape forward. According to a recent federal report, the NIH supports “300,000 individuals at over 2,500 hospitals, medical schools, universities, and other research institutes in every state in America. Another 10 percent of the NIH’s budget goes to intramural research at NIH-operated facilities, most of which is conducted by the nearly 6,000 NIH physicians and scientists located on the NIH campus”. The NIH also funds about a thousand new clinical trials each year. Defunding these investments would wreak havoc on US research, shut down thousands of clinical labs, terminate ongoing trials, lead to billions in lost economic activity, and put the country at a massive strategic disadvantage with the rest of the world. If we instead take the proposal seriously but not literally, it still requires answering the question: what comes next? Grants are scored by the existing scientific community and the NIH is largely staffed by members of the same. Abolishing the institution and starting a new one would, after tens of billions of dollars blown on the transition, simply see it repopulated with the very same people. Moreover, talk to researchers in other countries and you’ll hear many of the same complaints about their funding agencies as ours. All of this suggests that what we have now is a kind of natural steady state for scientific funding that, if it is in need of reform, needs to be reformed actively.

What is to be done?

I’ve mentioned a few ways to potentially address the specific challenges above, but it is critical to first define what it is we want the NIH to be doing that it is not currently doing. Should the agency fund more high risk research to tap into fresh ideas that would otherwise not see the light of day? Should it give no-strings-attached money to established investigators to pursue their wildest dreams (i.e. “fund the person not the project”)? Should it encourage the training of more new, younger, or minority investigators? Should it focus on simply making funding decisions faster? These are somewhat conflicting goals, so it is important to establish priorities. Funding more established dinosaurs who know how to fast-track a paper into Nature or Science likely means more high-profile work gets published (these people are established for a reason), but it also undercuts the next generation of investigators and genuinely out-of-the-box science. In a few cases, it may also mean big bags of money end up in the pockets of successful fraudsters who have figured out how to play the system (as has recently been alleged about a seminal Alzheimer’s investigator). On the other hand, funding more out-of-the-box science or more newbies means more failed projects and potentially more resentment from the established dinosaurs. Funding more training opportunities generally means fewer or less ambitious projects of any kind, since trainees need to develop their skills gradually and training itself costs money, but with a potential long-term windfall. In short, reformists need to grapple with the trade-offs and scientists need to make the trade-offs known.

If the NIH were funded by donors, what you're saying would make sense. It's not, though; it's funded by taxes. Think about this from a middle class perspective. These scientists are elites with fancy titles and extremely high salaries, often in the six figures, and they are frequently protected from changes in the labour market. Furthermore, they're usually Democrats and ideologically opposed to your lifestyle and culture. Why should this family support a single cent of their tax money going to bug sex research? If it were a voluntary donation, fine. But it's not, so Johnny's Christmas is slightly worse so Madame Scientist at the NIH can afford her Chanel.